UBC Library

Research Commons

A multidisciplinary hub supporting research endeavours, partnerships, and education.

More from the Research Commons at (UBC-V)

And from the Center for Scholarly Communication (UBC-O)

What is web scraping?

A way to extract information from websites

Acquire non-tabular or poorly structured data from a site and convert it to a structured format (.csv, spreadsheet)

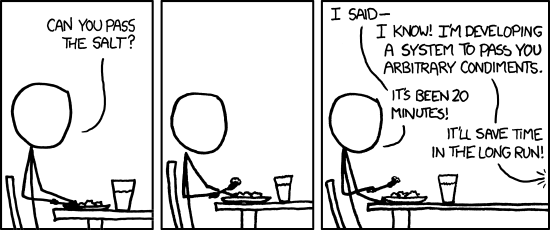

Source: https://xkcd.com/974/

Automate web data retrieval

- identify sites to visit

- define information to look for

- set how far to go

- set scraping frequency

Scraping vs crawling

Crawling. What Google does to index the web - systematically "crawling" through all content on specified sites.

Scraping. More targeted than crawling - identifies and extracts specific content from pages it accesses.

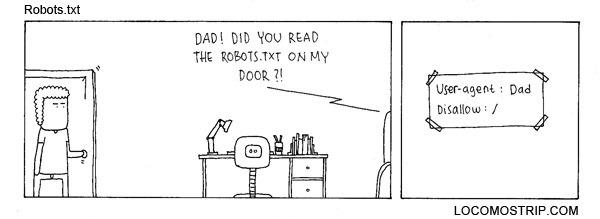

Some sites disallow web scraping with a robots.txt file.

Is scraping the best option?

- is it easy to copy/paste?

- does the site provide an export option?

- is there an API?

Ethics and considerations

Am I allowed to take this data?

Check the website for terms of use that affect web scraping.

Are there restrictions on what I can do with this data?

Making a local copy of publicly available data is usually OK, but there may be limits on use and redistribution (check for copyright statements).

Am I overloading the website's servers?

Scraping practice should respect the website's access rules, often encoded in robots.txt files.

When in doubt ask a librarian or contact UBC's Copyright Office

https://copyright.ubc.ca/support/contact-us/