Privacy, the Environment & Generative AI Tools

“The use of user prompts as training data for Generative AI tools raises significant privacy concerns. When personal information is included in training datasets, it can lead to unintended disclosures and misuse. Users must be aware of how their data is used and protected to ensure their privacy is maintained.” (ChatGPT 4.0, 2024)

The source for this activity is Christian Schmidt’s excellent AI resources.

If you have any questions or get stuck as you work through this in-class GenAI exercise, please ask the instructor for assistance. Have fun!

Privacy & Data Collection in UVic’s Copilot

- The good news is that UVic has turned on all of the privacy features in the version of Microsoft Copilot it has licensed for the UVic community.

- Please click on the green “Protected” button in the top right of the Copilot screen to confirm that you are using the UVic-licensed version. This means that strong privacy settings are in place and cannot be changed, as they could be if you had signed up for Copilot individually.

Privacy & Data Collection in ChatGPT

- The good news is that you can change the privacy settings in ChatGPT so that your user data and prompts are not used as training data for ChatGPT.

- Follow the instructions below to stop ChatGPT from using your prompts as training data (note that this will also turn off your chat history, which some users may find inconvenient):

Privacy & Data Collection in Perplexity.ai

- The good news is that you can change the privacy settings in Perplexity.ai so that your user data and prompts are not used as training data for Perplexity's models.

- Follow the instructions below to stop Perplexity.ai from using your prompts as training data:

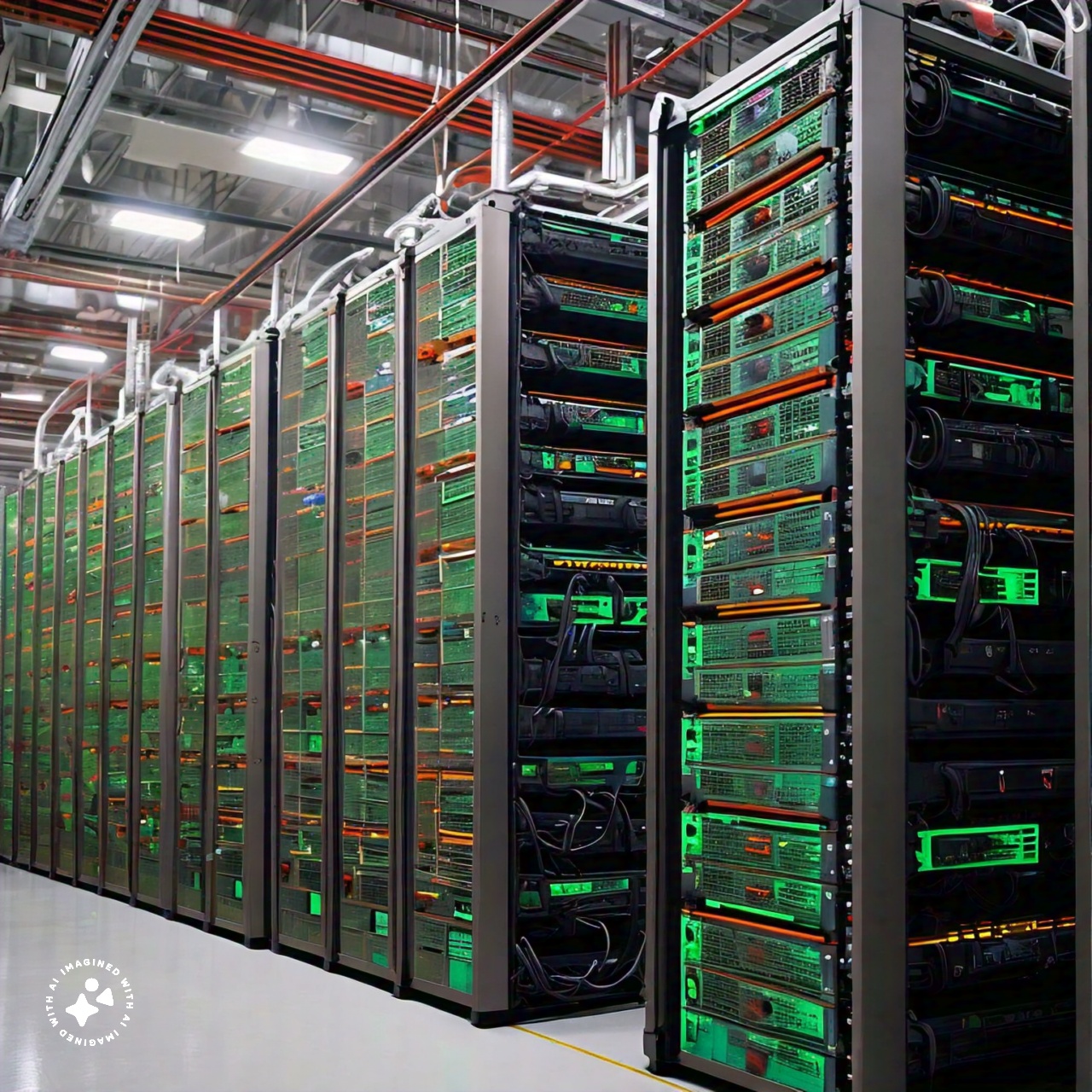

The Environment

The environmental impact of Generative AI stems largely from the significant energy needed to train and operate these models. Here are a few key points to consider:

- Energy Consumption:

- Generative AI models, especially large ones, consume vast amounts of energy during training and inference.

- For instance, OpenAI’s ChatGPT (created in San Francisco, California) is estimated to consume as much energy as 33,000 homes (Nature, 2024).

- As AI systems scale up, their energy demands could rival those of entire nations (Nature, 2024).

- Water Usage:

- Cooling the processors in data centers, and generating the electricity that powers them, requires enormous amounts of fresh water.

- In West Des Moines, Iowa, the data-center cluster serving OpenAI’s most advanced model, GPT-4, used about 6% of the district’s water while the model was being trained.

- Google and Microsoft also experienced significant spikes in water use while developing their large language models (Nature, 2024).

- Environmental Footprint:

- Generative AI’s pursuit of scale has been called the “elephant in the room” by researchers.

- The industry must prioritize pragmatic actions to limit its ecological impact.

- This includes designing more energy-efficient models, rethinking data center usage, and considering water conservation (Nature, 2024).

- Comparative Benefit-Cost Evaluation:

- MIT researchers emphasize the need for responsible development of generative AI.

- A comparative benefit-cost evaluation framework can guide sustainable practices (MIT, 2024).

- Hidden Costs:

- While generative AI is powerful, its hidden environmental costs warrant attention.

- Companies can take steps to make these systems greener, such as using more efficient models and minimizing energy consumption (HBR, 2023).

The environmental impact of generative AI is a growing area of concern and study, prompting calls for more sustainable practices in the field of artificial intelligence.

NOTE: The text in “The Environment” section of this document was generated by Microsoft Copilot in April 2024 and was edited by Rich McCue.